Ahna Girshick

Ahna Girshick explores the primal universal visual language that connects humans, machines, and nature. Her artwork has been exhibited at the Museum of Modern Art (NY), The Contemporary Jewish Museum (SF), The Barbican Centre (London), Southern Exposure (SF), Ely Center for Contemporary Art (New Haven), 120710 (Berkeley), University of California School of Law (San Francisco), and White Columns registry (NY). She holds a PhD from UC Berkeley in Vision Science, and was a postdoctoral fellow at NYU. She lectures in UC Berkeley’s Cognitive Science program on shared illusions in human and machine perception, and has given public talks at The Commonwealth Club of California, Stanford Research Institute, The Internet Archive’s Intersection of Art and Technology salon, and other venues worldwide.

Hugh Leeman: You grew up at the confluence of art and science, your dad a scientist and your mom a painter. Could you share a story about a moment in your childhood when you first felt these two worlds come together in your imagination and how that has influenced your creativity?

Ahna Girshick: My mother is an abstract painter and my father a scientist/professor. As a child, I was surrounded by my mom’s canvases and the smell of oil paint. Like many kids, I aspired to grow up and be like my parents. But I didn’t know that I could combine art and science and technology; and I didn’t really see any models—at home or in school—for how they might come together.

I remember “discovering” geometric flower patterns with a pencil and compass and then layering watercolor on top, and also teaching myself origami from Japanese instructions I couldn’t even read. My maternal grandfather, Fazlollah Reza, had written books on the application of information theory to analyze Persian poetry, and I was intrigued by his idea of using math to capture the essence of a poem.

In high school, I interned at The Geometry Center, whose mission was to better understand the shape of space. I made a 4D “hypercube” out of pipe cleaners. One of my mentors crocheted a Klein bottle. We ate a cabbage leaf salad to learn about mathematical surfaces so naturally curved that they can’t be flattened without tearing.

Later, I interned at Xerox PARC’s information-visualization startup, where I first saw how code could generate entirely new forms of interactive visual tools.

Looking back, I feel lucky to have landed in those places during a moment when computers, information, and visuals were just beginning to weave together.

HL: Your academic research and art practice often focus on how machines see and understand our world and how that shapes technology and the world around us. How does your research in this area connect to your art practice?

AG: My interest in visual perception started around age four when my parents enrolled me in the childcare center of Stanford University’s Child Psych program.

As a subject in their research studies, I vividly recall the experimenter pouring a cup of water from one vessel to another differently-shaped vessel. Each time I was asked if it was a different amount of water – as it appeared – or the same amount – as my mental model told me.

Later, I realized that I could pursue a PhD in visual perception as a way to connect science to my interest in the visual world. It was definitely more science than art, but those years of research became the fuel for my art practice.

HL: You have published dozens of peer-reviewed papers that have accumulated thousands of citations. There was a moment during your PhD where you were encouraged to refrain from using the words “artificial intelligence” in your writing because it was seen as a sort of science fiction that would never take place. Can you talk about this moment during your PhD and how your decades of dedication to research on AI have given you a foresight on the new technology?

AG: My undergraduate research project in 1996 was to build a natural-language web interface. I programmed it in LISP. It was insanely crude by today’s ChatGPT standards. Programming and the web were so technical and cumbersome back then, and I was excited about the possibility of more natural ways for humans to talk with machines.

Then came the so-called “AI Winter,” when it became clear just how hard it was to make computers do even the simplest tasks. People stopped talking about AI altogether. By the time I was in grad school in the early 2000s, the focus was on “principled models” — computational models that could be interpreted in biologically meaningful terms. Machine learning was viewed with suspicion because it appears to work without subject matter expertise and it's incredibly opaque.

Machine learning has since proven its power, but it still suffers the “black box” suspicion – now it is opaque both for technical reasons and because of corporate secrecy. These opacities carry into my artwork: I am drawn to cracking open black boxes and translating them into a visible form.

HL: Tell me more about your collaborations with artists like Philip Glass and Björk?

(L) NYC: 73-78, Interactive digital video, 2012. Nine stills from a 21-minute experience. Audio remix

AG: In the early 2010s, touchscreens and apps were suddenly everywhere. In collaboration with my artist-husband, Scott Snibbe, and a team of other technical-creatives, I experimented with creating interactive experiences that explore music in new ways.

For Philip Glass’s 75th birthday, Beck produced a remix of Philip Glass music and we created an accompanying interactive experience, which was available on the Apple App Store and also exhibited internationally, including at the Museum of Modern Art (NY), The Barbican Centre (London), and the Contemporary Jewish Museum (SF). Built in collaboration with our friend Lukas Girling, the app allowed people to perform Philip Glass-style music in their own way. During this time, I also had the opportunity to create a few animations for Björk’s Biophilia tour, to complement the app Scott produced with her. Now nearly everyone takes interactive touchscreen apps for granted. But at the time it was thrilling to be a small part of a creative experiment with technology, music, and interactivity, and to have the endorsement of these musicians I admire so much.

HL: You’ve spoken about creating art that reveals what neural networks are doing “through a different lens.” How do you balance scientific accuracy with artistic interpretation when translating technical processes into visual or interactive experiences?

AG: I teeter on the edge of being just accurate enough to capture the essence of the science - and from there take creative liberties to achieve an artistic vision. If I start to worry about what my scientist colleagues and mentors would think by striving too much for technical accuracy, it stops feeling like art. But if the art betrays the science underlying it, then the scientist part of me feels like a con.

HL: In your Convolutions series you show viewers the first visual “building blocks” of AI. What was the story of your first encounter with these "building blocks", and how did you recognize their connection with the primal motifs of the human brain?

AG: One overcast day in high school, I had just finished a run when I noticed fine lines floating over the clouds - very subtle, but clearly not an atmospheric phenomenon - and soon I realized they weren’t even real. It was a fleeting experience but one that sometimes recurred on overcast days. I never mentioned these to my vision science professors, lest I be laughed at. Later, I learned these visions are probably “phosphenes”, first noticed by the Greeks and likely stemming from spontaneously induced activity in the eye or visual cortex. Phosphenes are still not well understood, but I think of them as glimpses of neurons’ preferred patterns bubbling up into our awareness.

In grad school, I learned more about the preferred patterns of vision neurons in humans and mammals, often characterized by sinusoidal stripes called Gabors. One of my professors, Bruno Olshausen, fed photos into an early machine vision system and discovered that the artificial neurons preferred strikingly similar patterns to Gabors. So much of AI feels scary or irrelevant to me, so I was struck by this similarity with human perception: that there might be a hidden visual grammar shared by humans, mammals, and machines. We’re obviously different, but I came to understand that all visual learning systems reduce their vast training data to a small number of reusable building blocks – a visual grammar.
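For readers curious what a Gabor looks like numerically, here is a minimal Python sketch: a sinusoidal stripe pattern multiplied by a Gaussian "window" that fades the stripes toward the edges. This is an illustration only, not the code behind the artwork or the research described above; the function name `gabor` and its parameters (`size`, `wavelength`, `theta`, `sigma`) are assumptions made for this example.

```python
import math

def gabor(size=9, wavelength=4.0, theta=0.0, sigma=2.0):
    """Return a size x size grid of values: sinusoidal stripes under a Gaussian envelope.

    All parameter names and defaults are illustrative assumptions.
    """
    half = size // 2
    patch = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Position along the direction theta; the stripes vary along this axis.
            xr = x * math.cos(theta) + y * math.sin(theta)
            # Gaussian envelope: 1 at the center, fading toward the edges.
            envelope = math.exp(-(x * x + y * y) / (2 * sigma * sigma))
            # Sinusoidal carrier: the alternating light and dark stripes.
            carrier = math.cos(2 * math.pi * xr / wavelength)
            row.append(envelope * carrier)
        patch.append(row)
    return patch

patch = gabor()
```

Changing `theta` rotates the stripes and changing `wavelength` makes them finer or coarser, which is roughly how a family of such patterns can cover different orientations and scales.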

As neural networks have dramatically improved along with visualization tools, it has become increasingly possible for me to use code to peer under the hood of these systems and see this visual grammar. The job of this internal visual grammar is to produce our perceptions (or AI perceptions). Said another way, our visual perception is dictated by its grammar of neuron preferences.

I started thinking about the units in the grammar as technological or scientific specimens in the 16th-century European tradition of Kunstkammern. After all the work of excavating this visual grammar, I wanted to preserve it in a specimen collection, capturing pivotal moments in AI history when new models irreversibly shaped society’s relationship with technology. My Convolutions series embeds these specimens into “cabinets of curiosity” that bring the machine’s square gridded memory and logic into tension with its new ability to learn organically shaped forms.

See Through Machine, XI, Kiln-fused glass, custom machine learning software. 24” x 18”. 2025. With detail

See Through Machine, X, Kiln-fused glass, custom machine learning software. 24” x 18”. 2025. With detail

HL: What is your interest in working with glass?

AG: Glass has a deep history as a medium for filtering vision, going back to the invention of glassblowing by 1st century Romans; the 12th century innovations of stained-glass cathedral windows and eyeglasses; and the 17th century invention of the telescope.

Colored light is the medium of perception itself, now employed as a medium by contemporary artists such as James Turrell and Olafur Eliasson. The history of art is intertwined with the science of seeing: As far back as the earliest cave paintings, humans were trying to depict their inner perceptions, including what appear to be phosphenes and other primal perceptual phenomena.

All this got me interested in creating a perceptual experience in which we could explicitly see through to the inner grammar of an AI model. I made a series of glass windows called See Through Machines – when you look through them you have the experience of looking through the “lens of AI”.

HL: With Bias Reflectors, you invite viewers to see themselves entangled with machine-learned biases. Beyond what we often hear about AI bias, can you add some context to what this means and talk about your personal experiences or realizations that led you to create artwork that makes these unseen forces in our lives visible and intimate?

AG: Even though bias has a very precise technical definition, it is, rightly, a charged term in today’s culture, associated with negative societal ramifications. Most people know bias has a negative connotation, but do not know what bias is and where it comes from. Bias is actually the flip side of an optimized perceptual system that evolved for survival, not accuracy. One can think of the simplest bias as a neuron’s preference for certain signals. I wanted to depict this earliest form of visual bias – humble origins. I put the biases on the surface of a mirror so that the viewer’s face would become unavoidably tangled with it, because there is no way we can look without our own biases.

HL: In Semantic Segmentation, you reference Bruegel’s artwork, The Harvesters, through AI perception. How did you come to see this piece as a bridge between 16th-century perspective and neural networks today?

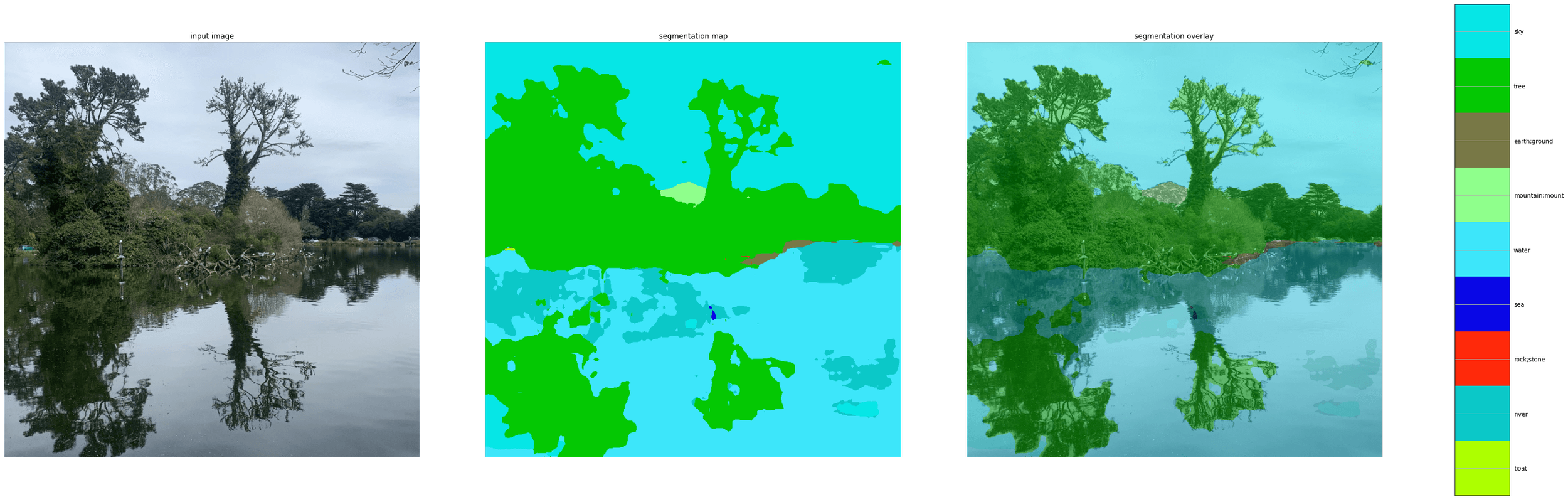

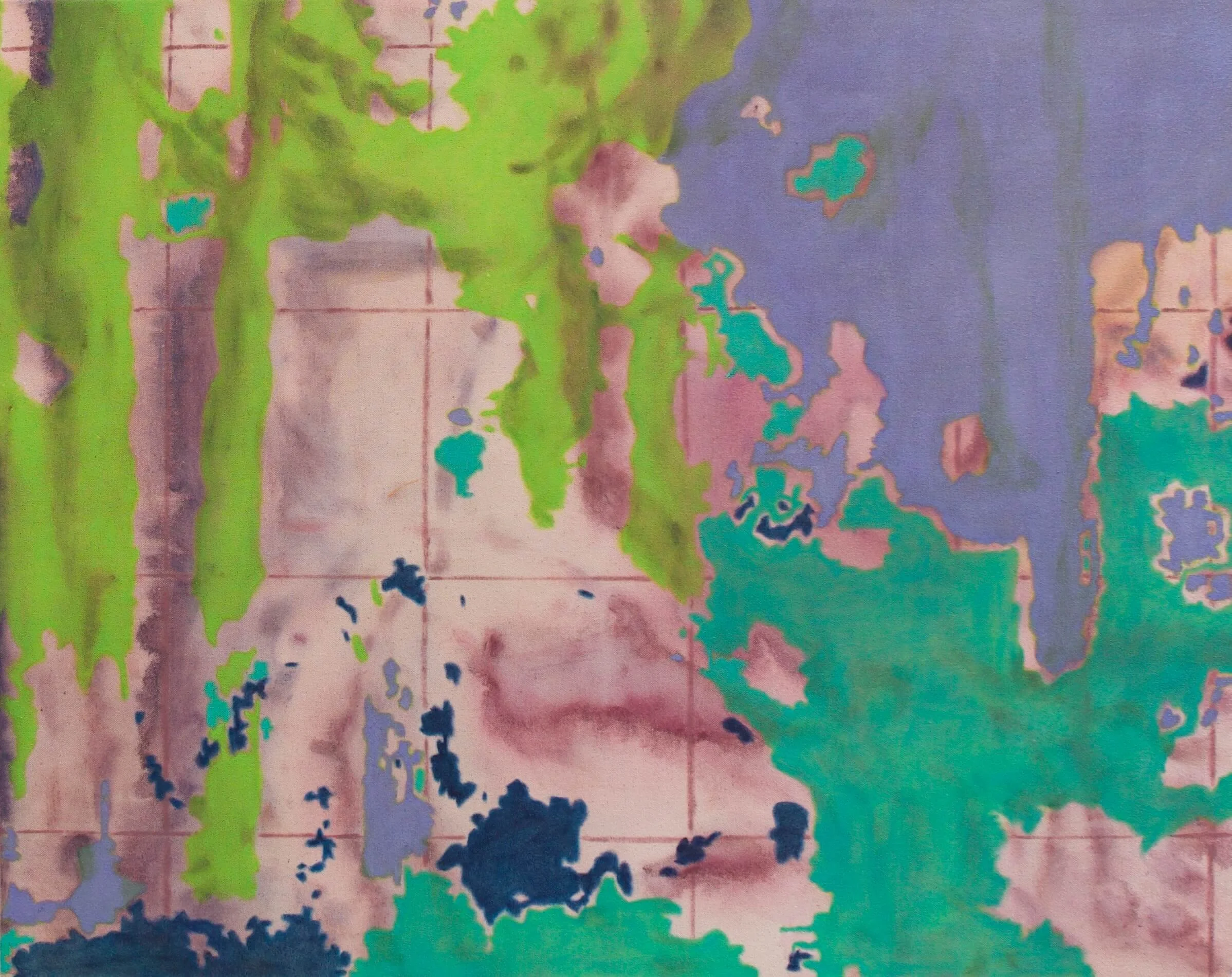

AG: I am drawn to a particular machine perception algorithm called semantic segmentation because it speaks to how painters have historically interpreted their 3D environment and projected it onto 2D canvases. In this algorithm, a machine is fed a 2D photograph and then infers the 3D objects within it, outputting a color-coded map with a legend of labeled regions – tree, plants, sky, people, etc. The shapes of the objects in the output maps are often unrecognizable and the assigned labels quite limited. I’m reminded of my high school drawing classes in which I was told to squint at a coffee cup to see its foreshortened contours projected onto my retina, and to try and suppress my brain’s 3D interpretation and any prior knowledge about “coffee cups.”
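The output format described above, a color-coded map plus a legend of labeled regions, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the software used for the paintings: in a real system the per-pixel scores come from a trained neural network, whereas here they are hand-written, and the label set, colors, and function names (`LEGEND`, `segment`, `colorize`, `legend_for`) are all hypothetical.

```python
# Hypothetical label set and colors, chosen only for illustration.
LEGEND = {
    0: ("sky", (135, 206, 235)),
    1: ("tree", (34, 139, 34)),
    2: ("person", (220, 20, 60)),
}

def segment(scores):
    """Pick the highest-scoring class id for every pixel.

    scores[y][x] is a list with one score per class.
    """
    return [
        [max(range(len(px)), key=px.__getitem__) for px in row]
        for row in scores
    ]

def colorize(label_map):
    """Turn a grid of class ids into a grid of RGB colors."""
    return [[LEGEND[c][1] for c in row] for row in label_map]

def legend_for(label_map):
    """List only the labels that actually appear in the map."""
    present = sorted({c for row in label_map for c in row})
    return {LEGEND[c][0]: LEGEND[c][1] for c in present}

# A hand-written 2x2 "image" of per-class scores (stand-in for a network's output).
scores = [
    [[0.9, 0.1, 0.0], [0.8, 0.2, 0.0]],
    [[0.1, 0.7, 0.2], [0.1, 0.2, 0.7]],
]
labels = segment(scores)
color_map = colorize(labels)
legend = legend_for(labels)
```

Because every pixel must receive one label from a fixed list, anything outside that list is forced into the nearest available category, which is one way to read the "quite limited" labels mentioned above.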

Bruegel’s 1565 painting The Harvesters was originally just a test image for the algorithm. Yet it is one of the most widely-studied paintings of all time – The Met has called it “the first modern landscape painting.” I had so many segmentation maps of this painting before I realized that the semantic segmentation algorithm is technology that maps from 2D pixels to 3D meaning – the inverse of Bruegel’s use of the technology of his day, linear perspective, which translates from 3D meaning to 2D pixels.

I painted the algorithm’s output legend on the back of each canvas for posterity... its visibility is obscured just the way everything about AI’s internal working feels obscured!

HL: Your process involves painting by hand after the AI has mapped contours and objects. Could you describe the story of what it feels like to embody the AI’s vision and where you sense the boundary between its vision and your own?

AG: These days there is a glut of AI-generated imagery that just feels “dead”. I’m less interested in those tools, but I am very interested in these AIs’ perceptual processes and underlying technology. What assumptions do those tools make? What do they say about our own perception? I want my ideas processed through my human brain, or my human hand. All of my work starts with an idea, often a photo, then an algorithm, coding, then back to something material. In the last stage I try to discard many of the technical details that got me there and respond to the canvas, because in the end, a viewer will connect best to something human-generated.

(Above) Forest Vision, Geography, Oil and acrylic on canvas. 24"x30". 2023. With detail of algorithmic process and painted legend on back of canvas

HL: Your series Edge Detectors in the Wild speaks of landscape imagery and an uncontrolled deployment of AI. Could you tell the story of how you chose this title, and what it means to you in light of today’s unpredictable AI landscape?

AG: The very first neurons in the brain to process visual information look for edges, and hence are called “edge detectors”. This first unconscious contact of light and visual neurons, before we recognize objects and make judgments, is related to the Buddhist idea of sparśa. This initial sensitivity to edges also emerges spontaneously when AIs are trained to understand images. It turns out that all visual learning systems aim to reduce their training data to a small set of reusable units – their visual grammar – which starts with edge detectors.

When learning systems – biological or digital – are trained and tested, it is always with data limitations. When AI systems go outside their training data – called “in the wild” – reality is perceived in unpredictable ways. This poses some dangers. Of course, when I go out of my comfort zone, I too am unpredictable! “In the wild” is a machine learning term, but I also liked it because it connects us back to wilderness, the original training data for humans.

(Above) Two stills from: Machine Seeing Belgum Trail Woods, 4K video, 9:53 min, custom machine learning software, sound. 2025

https://www.lightdark.org/art/machine-seeing-nature

Hugh Leeman: Create a spectrum of the future: what is your greatest sense of optimism around AI and the arts, and your greatest concern?

Ahna Girshick: As tech increases around us, I take greater joy in the non-tech moments: human intimacy, time in nature, the creative process, quiet, doing things by hand. These are needs we evolved with. Now, when I am out in nature, I am thinking about early humans out in nature. I feel wary of the idea of AI permeating and disrupting this human-nature intimacy which connects us back to humans hundreds of thousands of years ago. This was on my mind in my Machine Seeing Nature series. I tried to think about simulating the internal perceptions of AIs as they viewed nature, while putting AI’s visual grammar back in its natural home.

The best future uses of AI may be those that aid these very human needs of human connection, engagement, creativity, connection to nature. Of course the dominant market forces on AI don’t prioritize any of these subtleties, but on a good day I hope they may emerge and spread through the new medium of AI, because humans are wired towards these qualities.